Friday, May 16, 2025, 5:15 – 7:15 pm, Talk Room 2

Organizers: Preeti Verghese1, Marisa Carrasco2, David Melcher3 (1Smith-Kettlewell Eye Research Institute, 2New York University, 3New York University, Abu Dhabi)

Speakers: Preeti Verghese, Marisa Carrasco, Mike Landy, Barbara Anne Dosher, David Melcher, Mary Hayhoe, Rich Krauzlis, Jie Z. Wang, Jacob Feldman

Introduction: 5:15 pm

Preeti Verghese1, Marisa Carrasco2; 1Smith-Kettlewell Eye Research Institute, 2New York University

Eileen & VSS: 5:20 pm

Michael Landy, New York University

Talk 1: 5:25 pm

“Cogito Ergo Moveo”: The role of cognition and attention in eye movements in the work of Eileen Kowler

Barbara Anne Dosher, University of California, Irvine

“I think, therefore I move,” she titled one review paper (Kowler, 1996). One key strand of Eileen Kowler’s research revealed how attention and expectation engage eye movements to serve the needs of vision. Her experimental interventions pushed models of eye movement control beyond earlier accounts that “assume[d] that eye movements are driven by low-level sensory signals, such as retinal image position or retinal motion”. She investigated how higher-level cognitive knowledge and goals shape behavior to optimize the eye’s acquisition of information. Key examples of this work include the role of attention and selection in smooth pursuit, the interaction of attention and perception in single eye movements, and the dynamics of attention used to guide sequences of eye movements. This talk considers some of these findings.

Talk 2: 5:40 pm

What visual representation guides saccades? Reflections on “Shapes, Surfaces and Saccades” (Melcher & Kowler, 1999)

David Melcher, New York University, Abu Dhabi

Back when eye tracking required an entire room full of machinery, pioneering research on the oculomotor system investigated fixational and saccadic eye movements to simple targets, such as fixation points, crosses, or disks. A series of studies in the 1990s indicated that, for simple outline shapes, saccades landed near the center-of-gravity. These studies suggested a relatively primitive representation of the visual target, one formed before elements are linked into contours and shapes. In a series of six experiments, we showed that saccadic landing position was predicted by the center-of-area of a surface defined by the shape boundary. This finding extended a line of Eileen Kowler’s research showing that eye movements are not merely reflexive, but instead reflect complex visual and cognitive processing. In the decades since, key ideas from this 1999 paper have been expanded into studies of natural and 3D scene perception, trans-saccadic prediction of object features, ensemble processing, smooth pursuit, and grasping movements, among other topics. Still, fundamental questions remain about how sensory and motor systems interact, and about the extent to which the oculomotor system reflects, and differs from, our conscious visual experience.

Talk 3: 5:55 pm

Understanding Natural Vision

Mary Hayhoe, University of Texas, Austin

At a time when much eye movement research was dominated by a stimulus-driven, linear-systems approach, Eileen Kowler demonstrated that eye movement control is intrinsically connected to a range of cognitive processes such as attention, memory, prediction, planning, and scene understanding. She also understood that this is a natural consequence of the fact that eye movements are embedded in ongoing actions, and she argued for measuring eye movements in the context of unconstrained behavior. As eye- and body-tracking technology has developed, we can measure the operation of these cognitive processes in more diverse contexts, which has allowed a more unified view of visuomotor control. If we assume that the job of vision is to provide information for selecting suitable actions, we can view gaze control as part of complex sequential decision processes in the service of goal-directed behavior. In natural behavior, even the simplest actions involve long- and short-term memory, the evaluation of sensory and motor uncertainties and costs, and planning that unfolds over seconds in the context of action sequences. Consequently, a decision-theoretic framework offers a more coherent approach to understanding natural visually guided behavior.

Talk 4: 6:10 pm

Opening the window from eye movements to cognitive expectations and visual perception

Rich Krauzlis, Laboratory of Sensorimotor Research, National Eye Institute

There was a time not so long ago when eye movements were not widely appreciated as providing windows into visual cognition and perception. Instead, they were viewed mostly as motor reactions to visual “error” signals. This engineering perspective was spectacularly successful in ferreting out the basic principles for smooth pursuit and other eye movements, but it did not easily accommodate non-sensory and non-motor factors. Against this backdrop, Eileen made a series of seminal observations starting with her thesis work, showing that cognitive expectations exert strong influences on smooth pursuit eye movements. Her experimental designs were wonderfully creative and established that there is much more going on in the pursuit system than can be found slipping across the retina. Her results sparked controversy at the time, but her conclusions are now broadly accepted: smooth pursuit is guided not only by low-level visual inputs, but also by higher-level visual processes related to expectations, memory, and cognition. These conclusions now seem almost self-evident, but in fact they took a great deal of perseverance and ingenuity. Eileen should be lauded not only for the significance of her scientific accomplishments, but also for the example she provided of an independent and courageous intellect.

Talk 5: 6:25 pm

Predictive smooth pursuit eye movements reflect knowledge of Newtonian mechanics

Jie Z. Wang1, Abdul-Rahim Deeb2, Fulvio Domini3, Eileen Kowler4

1 University of Rochester, 2 Johns Hopkins University, 3 Brown University, 4 Rutgers University-New Brunswick

Smooth pursuit employs a variety of cues to predict the future motion of a moving target, enabling timely and accurate tracking. Since real-world motions often obey Newtonian mechanics, an implicit understanding of these laws should be a particularly effective cue for anticipation in pursuit. In this study, we examine how 2D smooth pursuit incorporates Newtonian mechanics to interpret and predict future motion. We examined the tracking of a “target object” whose motion path appeared to result from a collision with a moving “launcher object”. The direction of post-collision target motion either matched or deviated from the Newtonian prediction. Newtonian and non-Newtonian paths were run in separate blocks, allowing observers the opportunity to predict and learn the target’s path based on the launcher’s movement. Anticipatory pursuit was faster and more precise when post-collision paths conformed to the predictions of Newtonian mechanics. Even when there was ample opportunity to learn the non-Newtonian motion paths, there was evidence of a bias toward the Newtonian prediction. These findings support the idea that smooth pursuit can leverage the regularities of everyday physical events to formulate predictions about future motion. These predictive capabilities make smooth pursuit better matched to natural motion, allowing more accurate and efficient tracking of real-world movements.

Talk 6: 6:40 pm

Decisions and eye movements in a dynamic naturalistic VR task (response to Kowler, 1995, personal communication)

Jacob Feldman, Rutgers University

(Joint work with Jakub Suchojad, Sam Sohn, Michelle Shlivko, and Karin Stromswold)

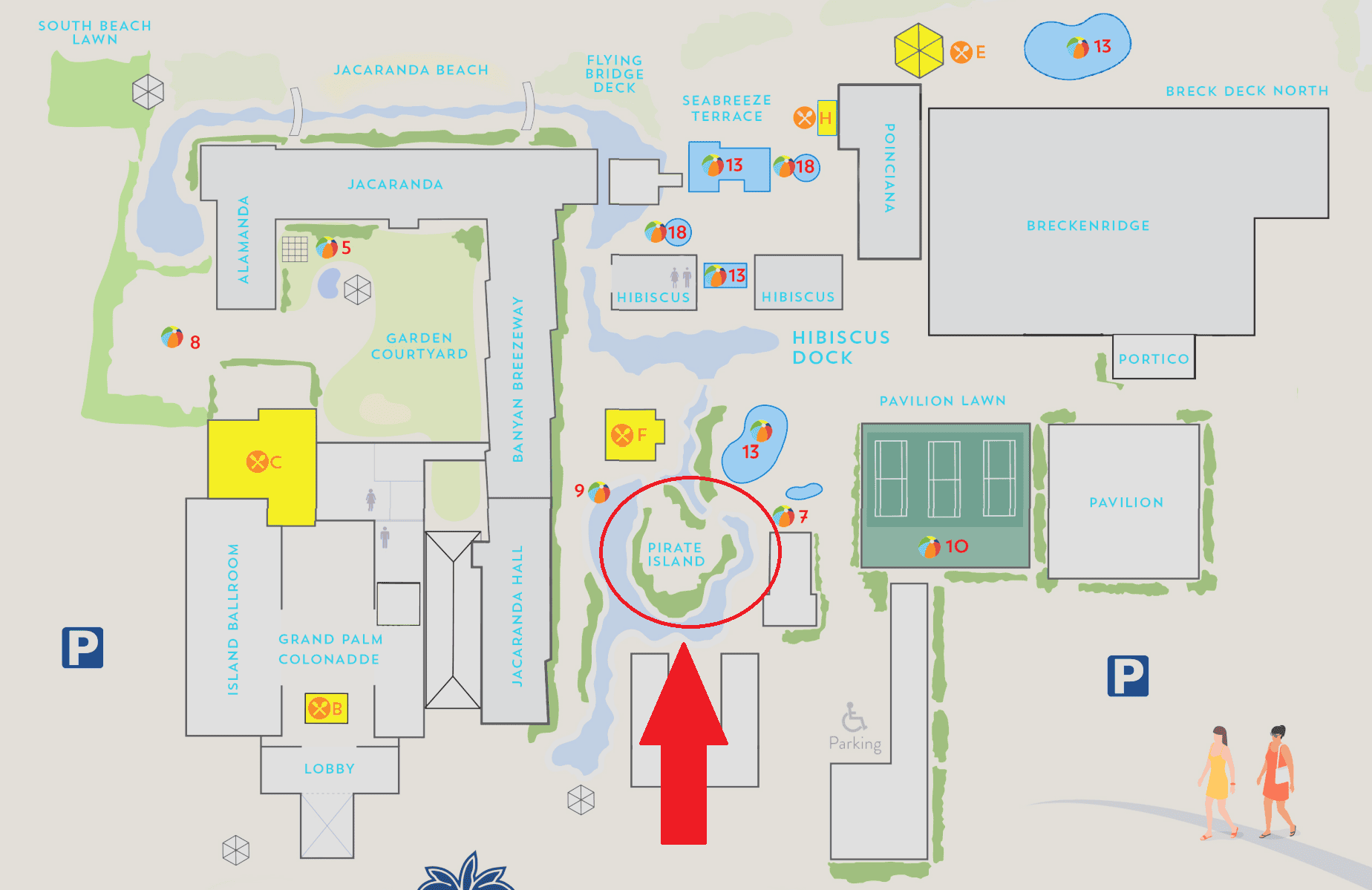

One of the main goals of cognitive research, continually emphasized by Eileen Kowler, is to understand behavior in realistic, natural contexts. In this talk I’ll discuss a ubiquitous natural task that we have recently studied in virtual reality (VR): social wayfinding. Social wayfinding refers to the way people navigate through environments that contain other people, like a crowded train station. In addition to generic motivations, such as minimizing the time and energy expended, this task involves a number of specifically social goals, like avoiding collisions with other people or rudely cutting them off. In our VR studies, subjects navigate around both static obstacles (e.g., couches) and dynamic ones (e.g., people walking around). We also record their eye movements to better understand how they handle the very complex series of decisions they must make as they move through the environment. Broadly speaking, we find that their eye movements reflect the hierarchical nature of the task, sometimes fixating on “local” obstacles and at other times on “global” features such as the target gate. I’ll end by commenting on how this work addresses (and also fails to address) a question that Eileen posed to me many years ago.